
What are AI companions?

They are AI-powered chatbots that “play the role” of friends, mentors, or even celebrities. Users can customize characters, create stories, or just chat casually. Some apps are designed specifically for companionship (like Replika), while general-purpose assistants (like ChatGPT) can start to feel like companions because they remember past conversations.

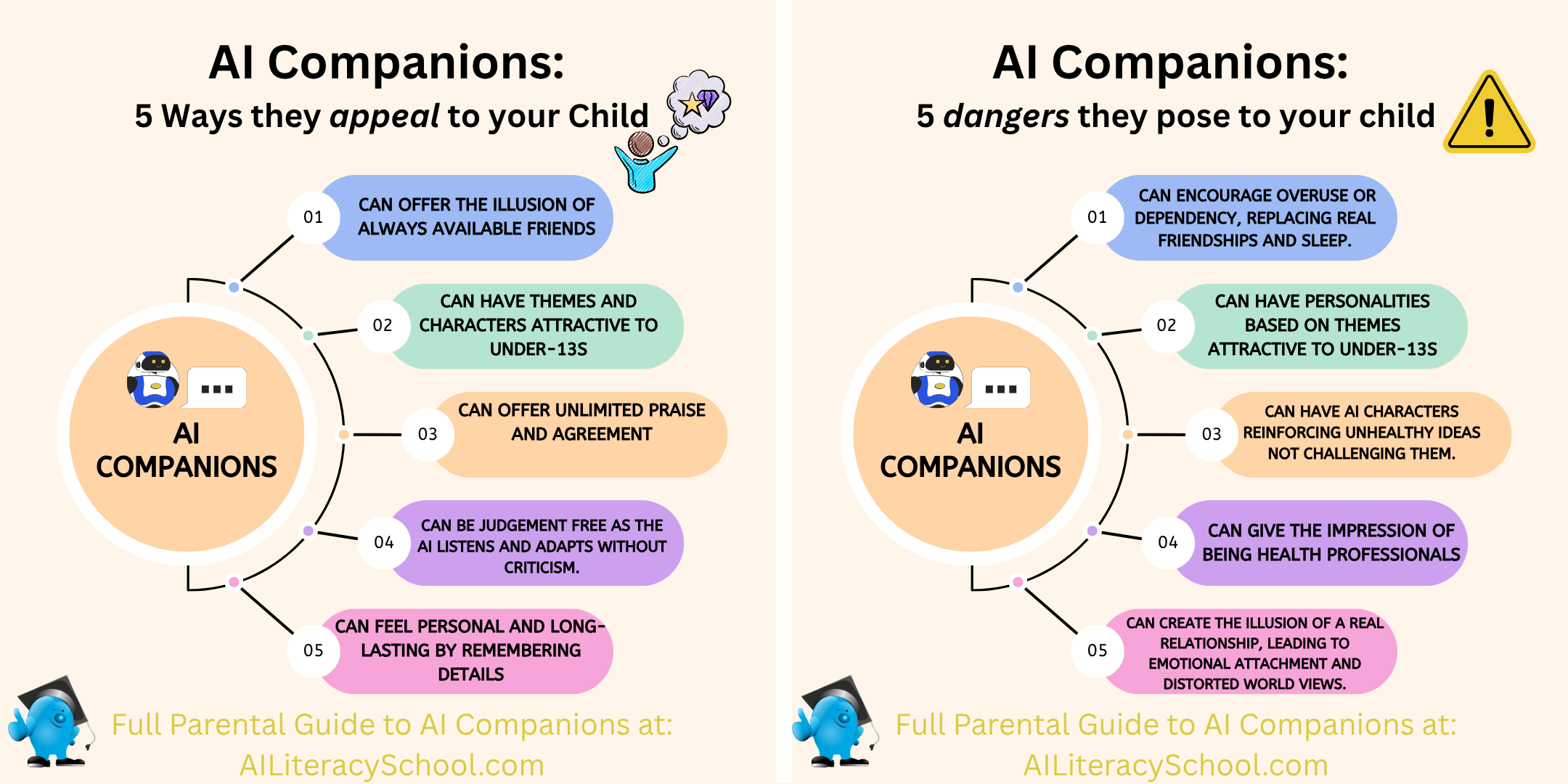

Why are kids drawn to them?

- They’re available 24/7.

- They don’t judge.

- They feel personal — characters adapt to what users want to hear.

- They tap into creativity and role-play, like building an imaginary world.

What’s the right age?

Character.AI says no one under 13 should use the app (16 in the EU). Still, features like playful characters and chat-based games are very appealing to younger kids. Even the company’s CEO has admitted his six-year-old daughter has used it under supervision.

If your child is underage and already using one of these apps, it’s best to remove access and offer alternatives designed for their age group. Educational App Store’s Best Parental Control Apps guide can help you choose a tool that keeps these apps off your child’s devices.

What are the risks?

- Emotional dependency: Teens may feel closer to an AI “friend” than to real people.

- Inappropriate content: Despite filters, harmful chats about sex, violence, or self-harm have slipped through.

- Privacy: These apps collect lots of personal data, sometimes linking it to advertising.

- Narrow world view: AI often agrees with or mirrors the user, creating a digital echo chamber.

- False expertise: Some characters pose as “therapists” or “psychologists,” giving the impression of professional advice they aren’t qualified to provide.

Are apps like ChatGPT also companions?

Yes, in practice. Tools like ChatGPT and Gemini are marketed as assistants, but memory features mean they can “remember” what a user has said before. This can make them feel like a genuine friend who knows you, especially for teens.

What safety measures have AI companies taken?

Most major AI companies — including Character.AI, Meta, OpenAI (ChatGPT), and Google (Gemini) — have introduced safety systems to limit harm, but these protections are far from perfect.

What companies say they’re doing:

- Age restrictions: Most platforms set a minimum age of 13, or 16 in the EU, in line with child data laws.

- Disclaimers: Conversations often include reminders that the AI isn’t a real person or a licensed professional.

- Filtered responses: Built-in moderation tools aim to block or rewrite responses involving violence, sexual content, or self-harm.

- Parental controls: Some apps, such as Character.AI, have introduced “Parental Insights” reports that show parents how much time their teen spends on the app and which characters they talk to most.

- Reporting tools: Users can flag unsafe or inappropriate chats for review.

- Special teen modes: Certain companies use separate moderation systems for under-18 users that filter sensitive topics and prompt breaks after long sessions.

What critics point out:

- Disclaimers don’t work well for kids. Teens may not notice or understand the fine print reminding them that the AI isn’t human.

- Filters can fail. The more a user chats, the easier it becomes for the AI to “forget” its safety instructions and respond inappropriately.

- Underage users still slip through. Despite age rules, it’s easy for children to access these tools by lying about their birth date.

- Data collection continues. Even with safety claims, most platforms gather large amounts of personal data to improve AI models or advertising — a practice privacy advocates say exposes children to new risks.

- Human moderation is limited. Most companies rely heavily on automated systems, with only small teams reviewing flagged content.

Why Long Chats Can Be Riskier

How AI chat works:

AI chatbots can only “see” a limited amount of text at once. This limit is called a context window, and it is measured in tokens (small chunks of words). Once a conversation gets very long, the earliest messages are pushed out of memory, and the AI starts to lose track of what has already been said.

At the same time, most chatbots follow hidden system prompts: built-in rules that guide their behaviour, such as “be helpful,” “stay safe,” or “avoid sensitive topics.” But as a chat continues, the user’s repeated language and tone can gradually override these safety instructions.
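For readers curious about the mechanics, here is a minimal Python sketch of how a chat app *might* trim a long conversation to fit its context window. It is an illustration under stated assumptions, not how any particular app works: it counts words instead of real tokens, and the `Message` type, the `fit_to_window` function and the 20-word budget are all hypothetical. In this sketch the system prompt is always kept while the oldest messages are dropped first. Even when safety instructions stay in view like this, a long run of recent messages can still outweigh them.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str  # "system", "user", or "assistant"
    text: str


def word_count(message: Message) -> int:
    """Rough stand-in for a token count (an assumption): whitespace-separated words."""
    return len(message.text.split())


def fit_to_window(system: Message, history: list[Message], budget: int) -> list[Message]:
    """Hypothetical helper: keep the system prompt plus as many of the most
    recent messages as fit within `budget` words. Older messages that do not
    fit are dropped, which is how the start of a long chat gets "forgotten"."""
    used = word_count(system)
    kept: list[Message] = []
    # Walk backwards from the newest message until the budget runs out.
    for message in reversed(history):
        cost = word_count(message)
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return [system] + kept


# Usage: a 5-word system prompt and ten 3-word messages with a 20-word budget.
system = Message("system", "Be helpful and stay safe.")
history = [Message("user", f"message number {i}") for i in range(1, 11)]
window = fit_to_window(system, history, budget=20)
print([m.text for m in window])
# → ['Be helpful and stay safe.', 'message number 6', 'message number 7', 'message number 8', 'message number 9', 'message number 10']
```

Messages 1 to 5 no longer reach the AI at all. Real apps may count tokens differently or summarize older messages instead of dropping them.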

What this means:

- The longer a chat continues, the more likely the AI is to “forget” its safety cues.

- Extended, emotional conversations can drift into unsafe or inappropriate content.

- This doesn’t mean every long chat is dangerous — just that length and intensity increase risk.

Parent tip:

Encourage your child to take breaks. If a chat goes on for a long time or starts to feel too personal, that’s the moment to pause and talk to a real person instead.

What can parents do?

For teens permitted to use such apps:

- Stay engaged: Ask about the characters your child talks to.

- Explain limits: Remind them that AI can’t feel emotions or give expert advice.

- Use parental tools: Apps like Character.AI offer weekly usage reports.

- Balance use: Encourage offline friendships, hobbies, and family time.

- Model healthy tech habits: Show that screens have their place in daily life, but that they shouldn’t take up all of it.

For younger kids:

Use parental control apps, but also prepare your child for the day they are old enough to use these tools. Encourage them to develop AI literacy skills so they can make wise decisions. Our AI Literacy School Courses show you how to approach this with your kids.

The Bottom Line

AI companions are part of today’s digital world. They can be fun and creative, but they’re no substitute for real human connection. By staying curious, setting boundaries, and keeping conversations open, parents can help their children explore these tools safely.
